本文记录一次无源码的Linux内核模块bug分析与复现,定位并确认dirpro模块使用了错误的方式查找task_struct,最后给出正确的查找方式。

现象 客户一台服务器出现cpu负载数值持续升高到手动重启时达到接近400、cpu使用率只有百分之三点几、ssh无法连接的情况,重启后只拿到了message日志如下。

可以看到cpu1处于内核态死循环,最上层栈是dirpro内核模块的函数get_process_name,次一层是同模块的new_sys_kill。

message 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 May 23 18:36:31 CTL_AH_225_079 kernel: BUG: soft lockup - CPU#1 stuck for 67s! [wsssr_defence_d:27392] May 23 18:36:31 CTL_AH_225_079 kernel: Modules linked in: resguard_linux(U) secmodel_linux(U) syshook_linux(U) nls_utf8 dirpro(U) xt_multiport tcp_diag inet_diag xt_comment nf_conntrack_ipv4 nf_defrag_ipv4 xt_state nf_conntrack iptable_filter ip_tables cpufreq_ondemand acpi_cpufreq freq_table mperf bonding ib_ipoib rdma_ucm ib_ucm ib_uverbs ib_umad rdma_cm ib_cm iw_cm ib_sa ib_mad ib_core ib_addr ipv6 microcode power_meter acpi_ipmi ipmi_si ipmi_msghandler iTCO_wdt iTCO_vendor_support ses enclosure sg sb_edac edac_core i2c_i801 lpc_ich mfd_core shpchp igb dca i2c_algo_bit i2c_core ptp pps_core ext4 jbd2 mbcache sr_mod cdrom sd_mod crc_t10dif ahci aacraid wmi dm_mirror dm_region_hash dm_log dm_mod [last unloaded: nf_conntrack] May 23 18:36:31 CTL_AH_225_079 kernel: CPU 1 May 23 18:36:31 CTL_AH_225_079 kernel: Modules linked in: resguard_linux(U) secmodel_linux(U) syshook_linux(U) nls_utf8 dirpro(U) xt_multiport tcp_diag inet_diag xt_comment nf_conntrack_ipv4 nf_defrag_ipv4 xt_state nf_conntrack iptable_filter ip_tables cpufreq_ondemand acpi_cpufreq freq_table mperf bonding ib_ipoib rdma_ucm ib_ucm ib_uverbs ib_umad rdma_cm ib_cm iw_cm ib_sa ib_mad ib_core ib_addr ipv6 microcode power_meter acpi_ipmi ipmi_si ipmi_msghandler iTCO_wdt iTCO_vendor_support ses enclosure sg sb_edac edac_core i2c_i801 lpc_ich mfd_core shpchp igb dca i2c_algo_bit i2c_core ptp pps_core ext4 jbd2 mbcache sr_mod cdrom sd_mod crc_t10dif ahci aacraid wmi dm_mirror dm_region_hash dm_log dm_mod [last unloaded: nf_conntrack] May 23 18:36:31 CTL_AH_225_079 kernel: May 23 18:36:31 CTL_AH_225_079 kernel: Pid: 27392, comm: wsssr_defence_d Not tainted 2.6.32-754.6.3.el6.x86_64 #1 Inspur NF5280M4/YZMB-00689-101 May 23 18:36:31 CTL_AH_225_079 kernel: RIP: 0010:[<ffffffffa02aa510>] [<ffffffffa02aa510>] get_process_name+0x50/0x100 [dirpro] May 23 18:36:31 CTL_AH_225_079 kernel: RSP: 0018:ffff882067f97578 EFLAGS: 00000287 May 23 18:36:31 CTL_AH_225_079 kernel: RAX: 0000000000006afc RBX: ffff882067f975c8 RCX: ffff8820684e4040 May 23 18:36:31 CTL_AH_225_079 kernel: RDX: ffff880f903d4ab0 RSI: ffff882067f97c08 RDI: ffff882067f97c08 May 23 18:36:31 CTL_AH_225_079 kernel: RBP: ffffffff8156513e R08: 0000000000000001 R09: 0000000000006b00 May 23 18:36:31 CTL_AH_225_079 kernel: R10: ffffffffa02ae140 R11: 0000000000000246 R12: ffffffff8109a7ef May 23 18:36:31 CTL_AH_225_079 kernel: R13: ffff882067f97508 R14: 0000000000000000 R15: ffff882067f97518 May 23 18:36:31 CTL_AH_225_079 kernel: FS: 00007fd887fff700(0000) GS:ffff880060640000(0000) knlGS:0000000000000000 May 23 18:36:31 CTL_AH_225_079 kernel: CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033 May 23 18:36:31 CTL_AH_225_079 kernel: CR2: 00000000010fda88 CR3: 0000002066c76000 CR4: 00000000001607e0 May 23 18:36:31 CTL_AH_225_079 kernel: DR0: 0000000000000000 DR1: 0000000000000000 DR2: 0000000000000000 May 23 18:36:31 CTL_AH_225_079 kernel: DR3: 0000000000000000 DR6: 00000000fffe0ff0 DR7: 0000000000000400 May 23 18:36:31 CTL_AH_225_079 kernel: Process wsssr_defence_d (pid: 27392, threadinfo ffff882067f94000, task ffff8820684e4040) May 23 18:36:31 CTL_AH_225_079 kernel: Stack: May 23 18:36:31 CTL_AH_225_079 kernel: 6e696c657361626c 00000a6e61637365 ffff8810675944c0 0000000000000000 May 23 18:36:31 CTL_AH_225_079 kernel: <d> ffffc900075f5a50 ffff882067f97c08 0000000000000000 0000000000006afc May 23 18:36:31 CTL_AH_225_079 kernel: <d> 0000000000000000 0000000034e20113 ffff882067f97e48 ffffffffa02ae19c May 23 18:36:31 CTL_AH_225_079 kernel: Call Trace: May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffffa02ae19c>] ? new_sys_kill+0x5c/0x220 [dirpro] May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff811bcce7>] ? __d_lookup+0xa7/0x160 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff8120f78c>] ? task_dumpable+0x3c/0x60 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff81243b8c>] ? security_task_to_inode+0x1c/0x20 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff811b1522>] ? do_lookup+0xa2/0x230 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff811bd542>] ? dput+0x32/0x170 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff811b01d0>] ? path_to_nameidata+0x20/0x60 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff811c7d4f>] ? seq_open+0x4f/0xb0 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff81212940>] ? proc_single_show+0x0/0xa0 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff81212940>] ? proc_single_show+0x0/0xa0 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff811c7eaf>] ? single_open+0x6f/0xa0 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff811c4600>] ? mntput_no_expire+0x30/0x110 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff8120f690>] ? proc_single_open+0x0/0x50 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff8119e677>] ? __dentry_open+0x257/0x380 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff812448b5>] ? security_inode_permission+0x25/0x30 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff8119e8b4>] ? nameidata_to_filp+0x54/0x70 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff81156a90>] ? zone_statistics+0x70/0xc0 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff81141721>] ? get_page_from_freelist+0x3d1/0x870 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff811431d9>] ? __alloc_pages_nodemask+0x129/0x960 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff8119e37f>] ? do_sys_open+0xff/0x130 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff812af9b6>] ? vsnprintf+0x336/0x5f0 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff8114a830>] ? __lru_cache_add+0x40/0x80 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff8114a891>] ? lru_cache_add_lru+0x21/0x40 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff8116a59d>] ? page_add_new_anon_rmap+0x9d/0xf0 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff8115fcf1>] ? handle_pte_fault+0x581/0xc80 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff812a2f71>] ? cpumask_any_but+0x31/0x50 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff8105a708>] ? flush_tlb_page+0x48/0xb0 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff810594dd>] ? ptep_set_access_flags+0x6d/0x70 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffffa0194b0b>] ? get_hookfn+0x9b/0x1f0 [syshook_linux] May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffffa0195b8b>] ? release_context+0x20b/0x3ad0 [syshook_linux] May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff810b03a4>] ? hrtimer_nanosleep+0xc4/0x180 May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffffa0196ce6>] ? release_context+0x1366/0x3ad0 [syshook_linux] May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff81564357>] ? system_call_fastpath+0x35/0x3a May 23 18:36:31 CTL_AH_225_079 kernel: [<ffffffff8156427e>] ? system_call_after_swapgs+0xae/0x152

进一步查看日志,发现有crond进程长时间阻塞,共输出10次后不再输出了,对比内核代码这里默认的限制就是只输出10次。栈信息可以看到进程是在synchronize_sched时阻塞。之前的两篇文章1 、2 说明了sched rcu需要每个cpu都经历过调度或在时钟中断时处于用户态才算完成一次gp,sychronize_sched才会完成。由于cpu1处于dirpro模块的死循环中,不满足gp的完成条件,synchronize_sched就会阻塞,进程进入TASK_UNINTERRUPTIBLE状态,处于这个状态的进程会被计算进系统负载数,rcu的gp无法完成还会造成各种异常情况。

message 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 May 23 18:40:01 CTL_AH_225_079 kernel: INFO: task crond:28259 blocked for more than 120 seconds. May 23 18:40:01 CTL_AH_225_079 kernel: Tainted: G --L------------ 2.6.32-754.6.3.el6.x86_64 #1 May 23 18:40:01 CTL_AH_225_079 kernel: "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message. May 23 18:40:01 CTL_AH_225_079 kernel: crond D 000000000000001c 0 28259 22609 0x00000080 May 23 18:40:01 CTL_AH_225_079 kernel: ffff8820679bfce8 0000000000000082 ffff8820679bfcb0 ffff8820679bfcac May 23 18:40:01 CTL_AH_225_079 kernel: ffffffff81132b44 0000000000001d43 026bd5074c8df846 ffff880060658c00 May 23 18:40:01 CTL_AH_225_079 kernel: 0000002999d41b01 0000000000002dfe ffff88206582b068 ffff8820679bffd8 May 23 18:40:01 CTL_AH_225_079 kernel: Call Trace: May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff81132b44>] ? file_read_actor+0x44/0x180 May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff81559d05>] schedule_timeout+0x215/0x2f0 May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff8134d725>] ? extract_entropy+0x125/0x1f0 May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff81559963>] wait_for_common+0x123/0x180 May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff81070890>] ? default_wake_function+0x0/0x20 May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff81559a9d>] wait_for_completion+0x1d/0x20 May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff810a6608>] synchronize_sched+0x58/0x60 May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff810a6590>] ? wakeme_after_rcu+0x0/0x20 May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff8123fb2c>] install_session_keyring_to_cred+0x6c/0xd0 May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff8124388c>] ? security_prepare_creds+0x1c/0x20 May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff8123fcce>] join_session_keyring+0x13e/0x170 May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff810f2f97>] ? audit_syscall_entry+0x1d7/0x200 May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff8123e7f8>] keyctl_join_session_keyring+0x38/0x70 May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff8123f4a0>] sys_keyctl+0x180/0x1a0 May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff81564357>] system_call_fastpath+0x35/0x3a May 23 18:40:01 CTL_AH_225_079 kernel: [<ffffffff8156427e>] ? system_call_after_swapgs+0xae/0x152

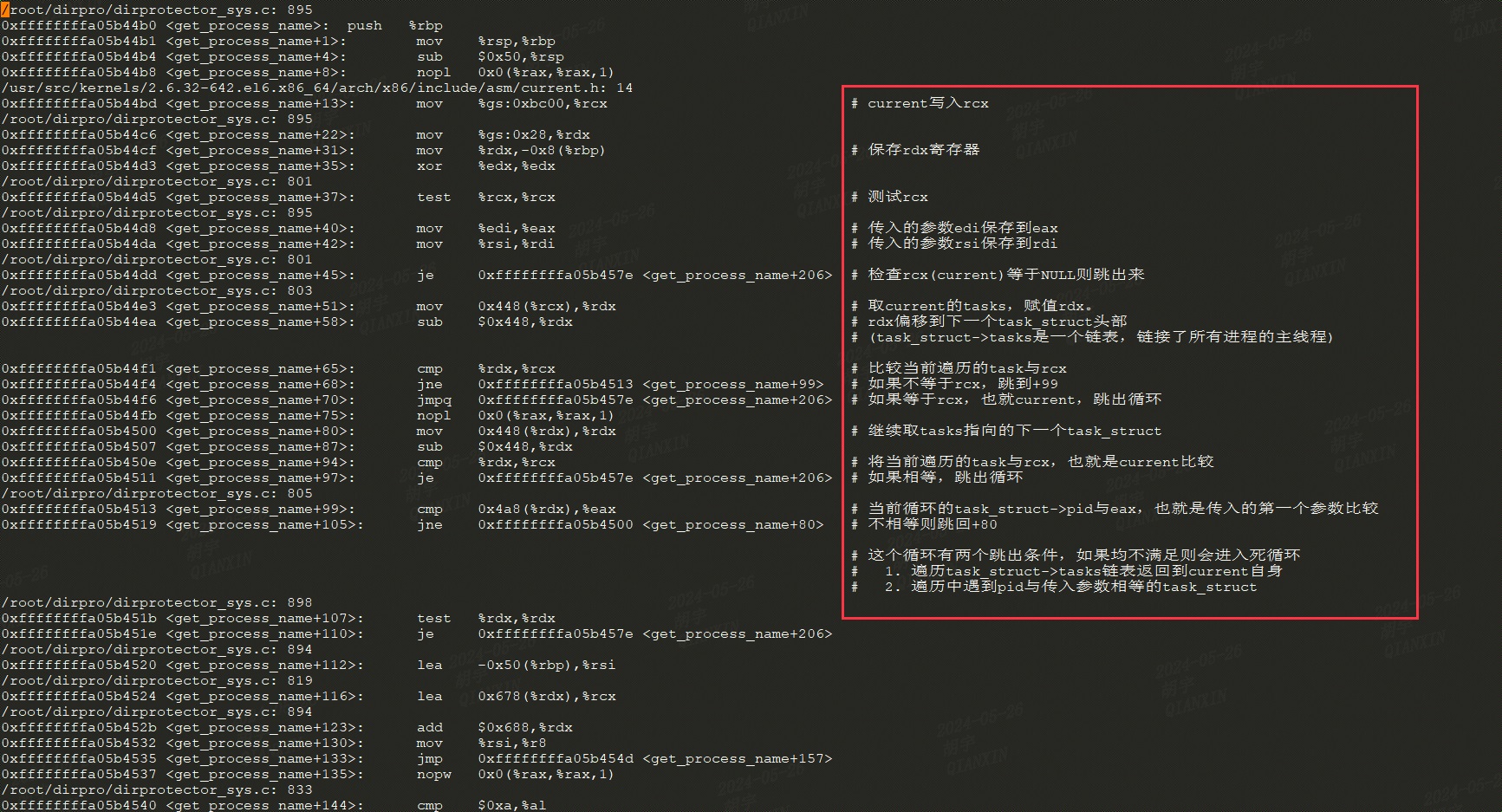

反汇编 之后进一步取到dirpro内核模块的文件,尝试反汇编。

可以得到一个死循环场景:

用户态程序启动一个子线程

子线程调用kill系统调用,pid是一个不存在的进程,或者说是一个已经退出的进程

复现 尝试复现该bug,新建一个同内核版本虚拟机,安装dirpro所属的客户端,查看dirpro加载成功。

配置内核panic参数

1 2 3 4 sysctl kernel.panic=10 sysctl kernel.hung_task_panic=1 sysctl kernel.softlockup_panic=1 sysctl kernel.nmi_watchdog=1

编译运行一个测试程序

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 #include <stdio.h> #include <stdlib.h> void * func (void *arg) while (1 ) { kill(11111 , 0 ); printf ("kill 11111 0\n" ); sleep(1 ); } } int main (int argc, char **argv) pthread_t t; pthread_create(&t, NULL , func, NULL ); while (1 ) { sleep(1 ); printf ("Main\n" ); } return 0 ; }

成功复现cpu死循环,内核自动panic后查看日志,与预期一致,而且只有dirpro这一个第三方内核模块存在。

vmcore-dmesg.txt 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 <0>BUG: soft lockup - CPU#1 stuck for 67s! [a.out:5114] <4>Modules linked in: dirpro(U) autofs4 8021q garp stp llc ipt_REJECT nf_conntrack_ipv4 nf_defrag_ipv4 iptable_filter ip_tables ip6t_REJECT nf_conntrack_ipv6 nf_defrag_ipv6 xt_state nf_conntrack ip6table_filter ip6_tables ib_ipoib rdma_ucm ib_ucm ib_uverbs ib_umad rdma_cm ib_cm iw_cm ib_sa ib_mad ib_core ib_addr ipv6 uinput microcode sg vmware_balloon vmxnet3 i2c_piix4 shpchp ext4 jbd2 mbcache sr_mod cdrom sd_mod crc_t10dif ahci vmw_pvscsi pata_acpi ata_generic ata_piix vmwgfx ttm drm_kms_helper drm i2c_core dm_mirror dm_region_hash dm_log dm_mod [last unloaded: speedstep_lib] <4>CPU 1 <4>Modules linked in: dirpro(U) autofs4 8021q garp stp llc ipt_REJECT nf_conntrack_ipv4 nf_defrag_ipv4 iptable_filter ip_tables ip6t_REJECT nf_conntrack_ipv6 nf_defrag_ipv6 xt_state nf_conntrack ip6table_filter ip6_tables ib_ipoib rdma_ucm ib_ucm ib_uverbs ib_umad rdma_cm ib_cm iw_cm ib_sa ib_mad ib_core ib_addr ipv6 uinput microcode sg vmware_balloon vmxnet3 i2c_piix4 shpchp ext4 jbd2 mbcache sr_mod cdrom sd_mod crc_t10dif ahci vmw_pvscsi pata_acpi ata_generic ata_piix vmwgfx ttm drm_kms_helper drm i2c_core dm_mirror dm_region_hash dm_log dm_mod [last unloaded: speedstep_lib] <4> <4>Pid: 5114, comm: a.out Not tainted 2.6.32-754.6.3.el6.x86_64 #1 VMware, Inc. VMware Virtual Platform/440BX Desktop Reference Platform <4>RIP: 0010:[<ffffffffa0486510>] [<ffffffffa0486510>] get_process_name+0x50/0x100 [dirpro] <4>RSP: 0018:ffff88013ba5b678 EFLAGS: 00000206 <4>RAX: 0000000000002b67 RBX: ffff88013ba5b6c8 RCX: ffff88013ba2cab0 <4>RDX: ffff88013da49520 RSI: ffff88013ba5bd08 RDI: ffff88013ba5bd08 <4>RBP: ffffffff8156513e R08: 00007f19724ee700 R09: 00007f19724ee700 <4>R10: 00007f19724edc20 R11: 0000000000000202 R12: ffffffff8124afd1 <4>R13: ffff88013ba5b658 R14: ffff88013ba5b608 R15: 0000000000000007 <4>FS: 00007f19724ee700(0000) GS:ffff880028280000(0000) knlGS:0000000000000000 <4>CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033 <4>CR2: 00007f2a3d3ec000 CR3: 000000013bd9e000 CR4: 00000000001607e0 <4>DR0: 0000000000000000 DR1: 0000000000000000 DR2: 0000000000000000 <4>DR3: 0000000000000000 DR6: 00000000ffff0ff0 DR7: 0000000000000400 <4>Process a.out (pid: 5114, threadinfo ffff88013ba58000, task ffff88013ba2cab0) <4>Stack: <4> 0000000000000000 0000000000000000 0000000000000000 ffff88013ba5b6b8 <4><d> ffffffff81156ab9 0000000000000001 ffff88013ddd79c0 ffff8800000235c0 <4><d> ffff88013ba5b7e8 000000004945a606 ffff88013ba5bf48 ffffffffa048a19c <4>Call Trace: <4> [<ffffffff81156ab9>] ? zone_statistics+0x99/0xc0 <4> [<ffffffffa048a19c>] ? new_sys_kill+0x5c/0x220 [dirpro] <4> [<ffffffff811431d9>] ? __alloc_pages_nodemask+0x129/0x960 <4> [<ffffffffa0489cb0>] ? new_open_f+0x0/0xe0 [dirpro] <4> [<ffffffff8119e677>] ? __dentry_open+0x257/0x380 <4> [<ffffffff8114a830>] ? __lru_cache_add+0x40/0x80 <4> [<ffffffff8114a891>] ? lru_cache_add_lru+0x21/0x40 <4> [<ffffffff8116a59d>] ? page_add_new_anon_rmap+0x9d/0xf0 <4> [<ffffffff8115fcf1>] ? handle_pte_fault+0x581/0xc80 <4> [<ffffffff812b255b>] ? strncpy_from_user+0x5b/0x90 <4> [<ffffffff81156ab9>] ? zone_statistics+0x99/0xc0 <4> [<ffffffff81141721>] ? get_page_from_freelist+0x3d1/0x870 <4> [<ffffffff811606f6>] ? handle_mm_fault+0x306/0x450 <4> [<ffffffff81054e64>] ? __do_page_fault+0x1f4/0x500 <4> [<ffffffff811431d9>] ? __alloc_pages_nodemask+0x129/0x960 <4> [<ffffffff8106860e>] ? account_entity_enqueue+0x7e/0x90 <4> [<ffffffff810786e2>] ? enqueue_entity+0x112/0x450 <4> [<ffffffff81063553>] ? set_next_buddy+0x43/0x50 <4> [<ffffffff810691d0>] ? check_preempt_wakeup+0x1c0/0x260 <4> [<ffffffff81078b1b>] ? enqueue_task_fair+0xfb/0x100 <4> [<ffffffff81063372>] ? check_preempt_curr+0x82/0xa0 <4> [<ffffffff810706f6>] ? try_to_wake_up+0x256/0x3f0 <4> [<ffffffff8106f6fe>] ? perf_event_task_sched_out+0x2e/0x70 <4> [<ffffffff81078153>] ? dequeue_entity+0x113/0x2e0 <4> [<ffffffff81564272>] ? system_call_after_swapgs+0xa2/0x152

bug原因 到这里可以确定是dirpro模块使用了错误的方式查找kill系统调用参数pid对应的task_struct实例,tasks链表连接从init_task开始的所有主线程,但是子线程的tasks成员只是复制了其父线程的值,在copy_process函数中可以看到,如果新创建的task_struct通过成员group_leader判断其不是主线程,则不会调用list_add_tail_rcu(&p->tasks, &init_task.tasks)将其链接到init_task.tasks链表中,这时子线程的tasks可以向前向后访问,但是其前后节点均指向子线程所属主线程的tasks。因此dirpro中使用的遍历方式,不会遍历回到current自身,又由于kill的目标pid已经退出,两个循环跳出条件均不会满足,因此进入了死循环。

如何正确查找task_struct 遍历所有主线程的task_struct在sched.h中有规范的使用方式如下

1 2 #define for_each_process(p) \ for (p = &init_task ; (p = next_task(p)) != &init_task ; )

如果需求是查找所有的task_struct,方式可以参考pid.c中实现

1 2 3 4 5 6 7 8 9 10 11 12 13 struct task_struct *find_task_by_pid_ns (pid_t nr, struct pid_namespace *ns) return pid_task(find_pid_ns(nr, ns), PIDTYPE_PID); } struct task_struct *find_task_by_vpid (pid_t vnr) return find_task_by_pid_ns(vnr, task_active_pid_ns(current)); }